Hi everyone. This is Mladen, Creative Director at Misfit Village, coming at you with our fourth devlog. As promised in the previous devlog, I’ll be talking about how we go about creating cutscenes and character animations for Go Home Annie.

Storyboarding

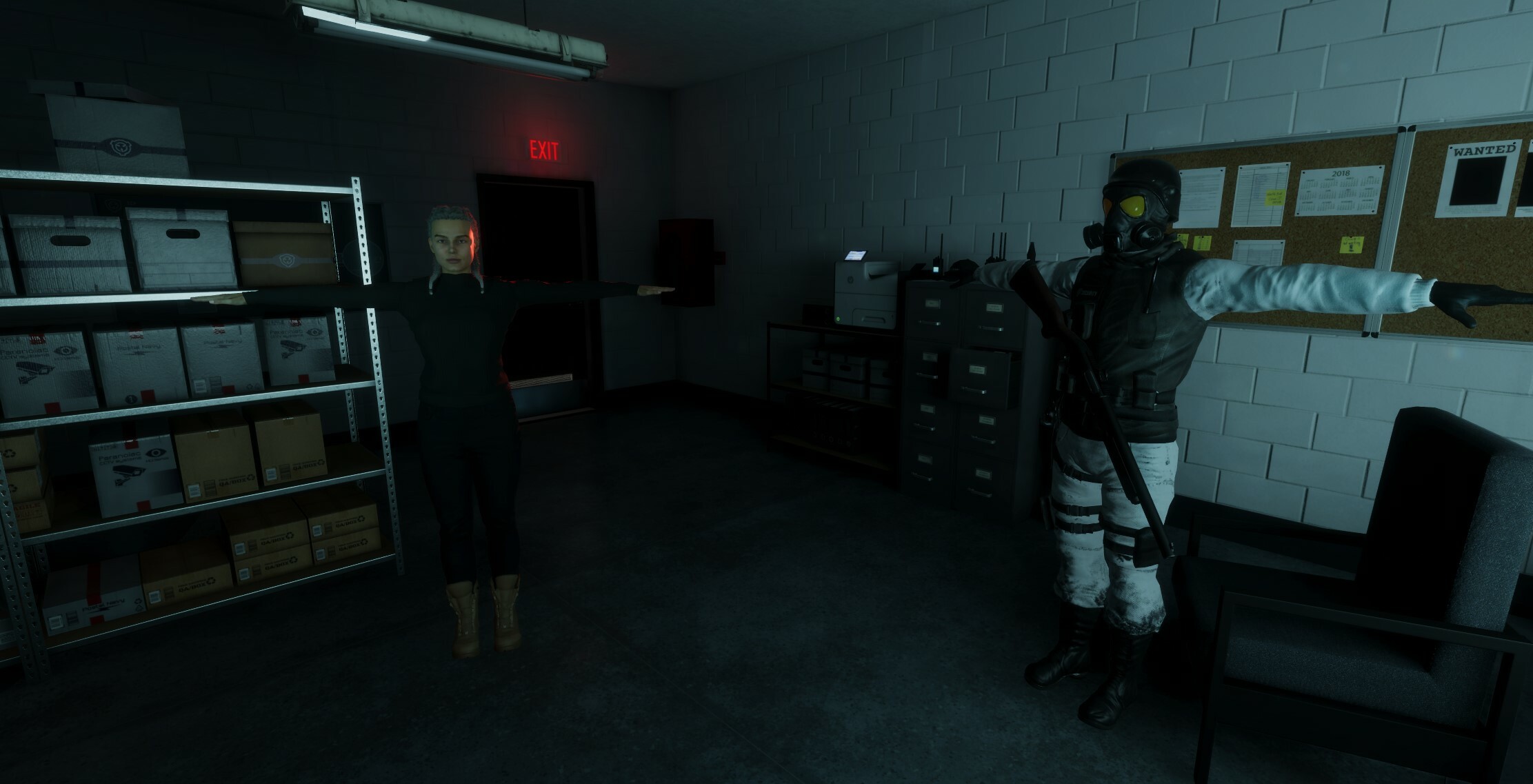

We create the levels, gameplay, and story at the same time, so these three elements often inform one another, giving us a sense of when a cutscene should start in the game and what it should tell the player. All cutscenes push the story and the player’s goals forward, but some are there to give the player a break from puzzles and exploration. After we have a rough idea of what should happen in a cutscene, we make an ugly, rough version of it with static characters and basic camera movement.

A screenshot from a rough version of a cutscene. Don’t judge.

All our cutscenes transition seamlessly from gameplay and are experienced from the player’s point of view. We never cut to a different camera. In that sense, Go Home Annie is one long take with no cuts, which makes the game flow beautifully.

Motion Capture

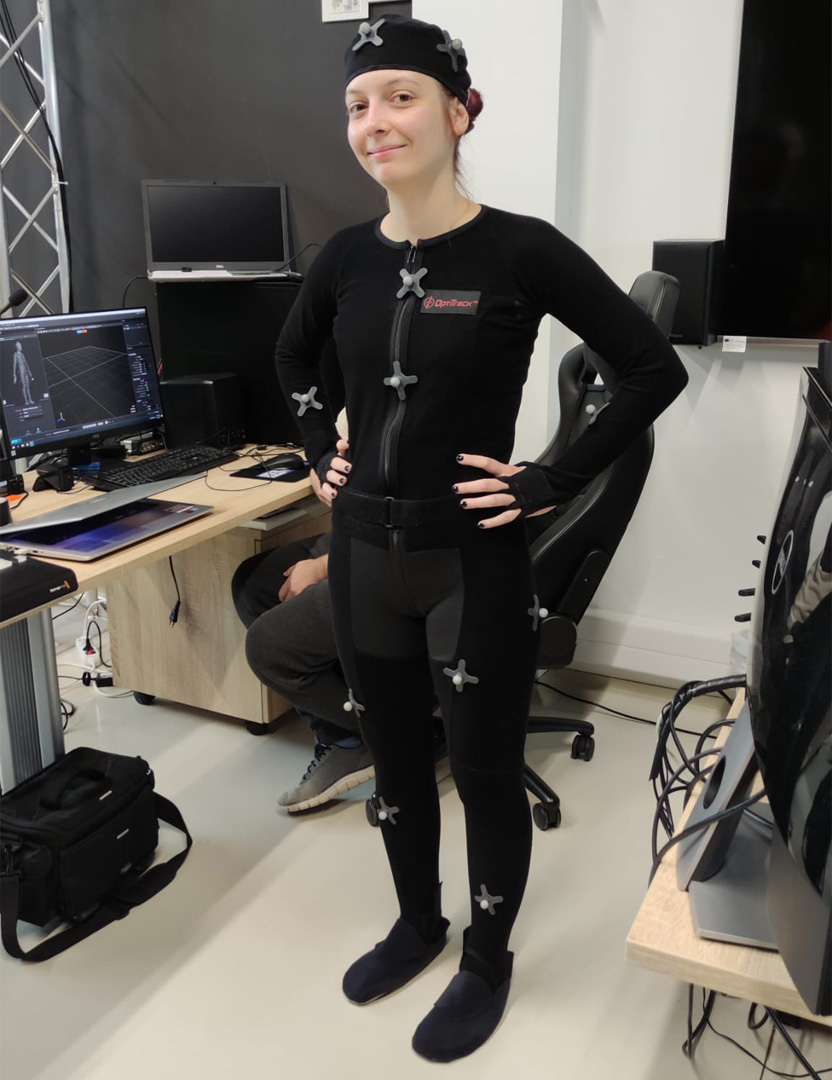

To breathe life into our characters, we use motion capture. You’ve probably seen footage of your favorite actors in spandex suits with little balls on them. Here’s us doing the same thing.

Our 3D Artist, Mateja, doubling as a motion capture performer.

We take advantage of the facilities and technology available to us at our incubator. Shout-out to PISMO, Novska, Croatia!

Mocap data is never perfect, so it requires a lot of manual cleanup. Also, recording finger movements from mocap never turns out well, so we do those by hand.

An example of a work-in-progress cutscene with raw motion-captured animations, no cleanup.
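To give a rough idea of what the simplest kind of mocap cleanup involves, here is a minimal Python sketch that smooths jitter out of a single animation channel with a centered moving average. It is an illustration only, not the tooling used on the game: the function name `smooth_channel`, the `window` parameter, and the idea of treating a channel as a plain list of per-frame values are all assumptions made for the example.

```python
def smooth_channel(samples, window=5):
    """Smooth one animation channel (e.g. a joint angle per frame).

    Uses a centered moving average. Near the start and end of the clip
    the window is shortened so the output has the same length as the input.
    """
    if window < 1 or window % 2 == 0:
        raise ValueError("window must be a positive odd number")

    half = window // 2
    smoothed = []
    for i in range(len(samples)):
        lo = max(0, i - half)                  # clamp window to clip start
        hi = min(len(samples), i + half + 1)   # clamp window to clip end
        neighbourhood = samples[lo:hi]
        smoothed.append(sum(neighbourhood) / len(neighbourhood))
    return smoothed


# Hypothetical elbow angle in degrees with a one-frame spike at frame 2.
raw_elbow = [30.0, 30.0, 55.0, 30.0, 30.0]
clean_elbow = smooth_channel(raw_elbow, window=3)
```

A wider window removes more jitter but also softens fast, intentional motion, which is one reason cleanup still needs a human eye.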

Camera Movement

We often record Annie’s point of view during motion capture by having one of our actors act out her movements.

But for all the short cutscenes where Annie just jumps through a window or opens a door, it’s not worth spending 3 hours putting on the mocap suit and calibrating everything.

That’s where Unity’s Live Capture system comes in. By using an iPhone with the Unity Virtual Camera app, we can transmit the phone’s movement directly into the engine. So I strap the phone to my head and start acting!

Here’s me with a phone strapped to my head, acting out a scene. And that same scene inside the game.

Facial Movement

We experimented with lots of systems for facial capture. We tried Unity’s Face Capture app and various other automated systems, but it turned out the best option for our project was to do it manually with Lip Sync Pro, using the audio file created by the voice actor and a transcript of the words they’re saying. We can’t show you any facial animations yet, as they’re very much a work in progress (and would also spoil the story a bit).

Additional Animation

To make characters hold their weapons perfectly and look at the player dynamically, we use Unity’s Animation Rigging package.
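The math behind a "look at the player" constraint can be sketched in a few lines. The Python below is not the Animation Rigging API, just an illustration of the underlying idea: work out the yaw and pitch needed to face a target, then clamp them so the head never twists unnaturally. The function name `aim_angles`, the angle limits, and the assumption that the character faces +Z with Y up are all choices made for the example.

```python
import math


def aim_angles(head, target, max_yaw_deg=70.0, max_pitch_deg=40.0):
    """Return (yaw, pitch) in degrees that turn `head` toward `target`.

    Assumes a Y-up coordinate system with the character facing +Z.
    Angles are clamped so the head stops turning at a believable limit.
    """
    dx = target[0] - head[0]
    dy = target[1] - head[1]
    dz = target[2] - head[2]

    # Yaw: rotation around the vertical axis, 0 means straight ahead.
    yaw = math.degrees(math.atan2(dx, dz))
    # Pitch: elevation above the horizontal plane.
    pitch = math.degrees(math.atan2(dy, math.hypot(dx, dz)))

    def clamp(value, limit):
        return max(-limit, min(limit, value))

    return clamp(yaw, max_yaw_deg), clamp(pitch, max_pitch_deg)


# Hypothetical positions: player standing ahead and to the right of the character.
yaw, pitch = aim_angles(head=(0.0, 1.7, 0.0), target=(2.0, 1.7, 2.0))
```

In practice the result would also be blended in gradually over a few frames so the head does not snap toward the player.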

Some secondary animation in the game is completely procedural, like the flappy skin on our deer friend here.
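A common way to get this kind of procedural wobble is a damped spring that chases the motion of the body it is attached to. The Python sketch below shows that general technique, not the code running in the game: the `Spring` class, its `stiffness` and `damping` values, and the 60 fps timestep are assumptions picked to give a visibly bouncy result.

```python
from dataclasses import dataclass


@dataclass
class Spring:
    """One-dimensional damped spring for secondary motion."""

    stiffness: float = 120.0  # how hard the spring pulls toward the target
    damping: float = 12.0     # how quickly the wobble dies out
    position: float = 0.0
    velocity: float = 0.0

    def step(self, target, dt):
        """Advance the spring by `dt` seconds toward `target`."""
        accel = (self.stiffness * (target - self.position)
                 - self.damping * self.velocity)
        # Semi-implicit Euler: update velocity first, then position.
        self.velocity += accel * dt
        self.position += self.velocity * dt
        return self.position


# The attachment point jumps from 0 to 1; the spring overshoots, then settles.
skin = Spring()
dt = 1.0 / 60.0
trace = [skin.step(target=1.0, dt=dt) for _ in range(600)]
```

Lower damping gives a floppier result, higher damping a stiffer one. Running one such spring per axis (or per bone) is usually enough for loose skin, ears, or tails.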

In giving all this attention to cutscenes and animation, we’re trying to go beyond what indie horror games usually do, hopefully making the end product a richer experience for you.

That’s it for this devlog. I’ll see you in the next one. Until then, take care!

Mladen Bošnjak

Creative Director at Misfit Village

Very cool and professional. I never expected that phone-driven player camera, that is so cool. Love this kind of devlog.

Btw I remember your demo made on that Ogre-based engine. You guys have certainly come very far since then.